Email: qin.ca[at]northeastern.edu

Hello and welcome! I am working at Google CoreML, where I focus on building Multimodal and GenMedia models, working on systems that can understand and generate across text, image, video, and other modalities. Before joining Google, I was at Salesforce AI Research, contributing to multimodal and generative models. I received my Ph.D. from Northeastern University in Boston, and during my studies I interned at Adobe and Salesforce, gaining hands-on experience in real-world AI research. I am passionate about the future and want to push the boundaries of what AI can see, reason about, and create.

news

| Jan, 2026 | I have jointed the Google, working on Multimodal and GenMedia! |

|---|---|

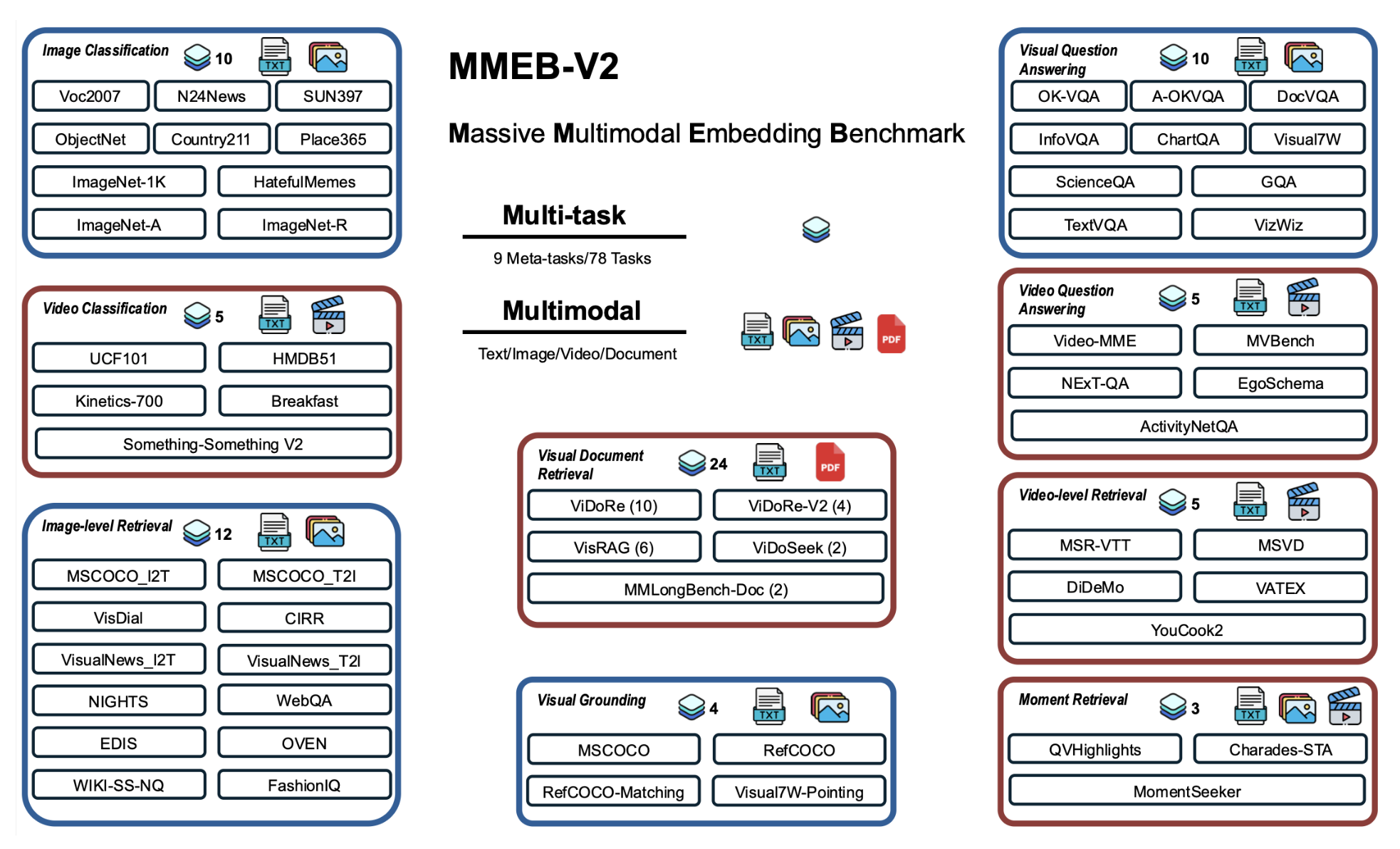

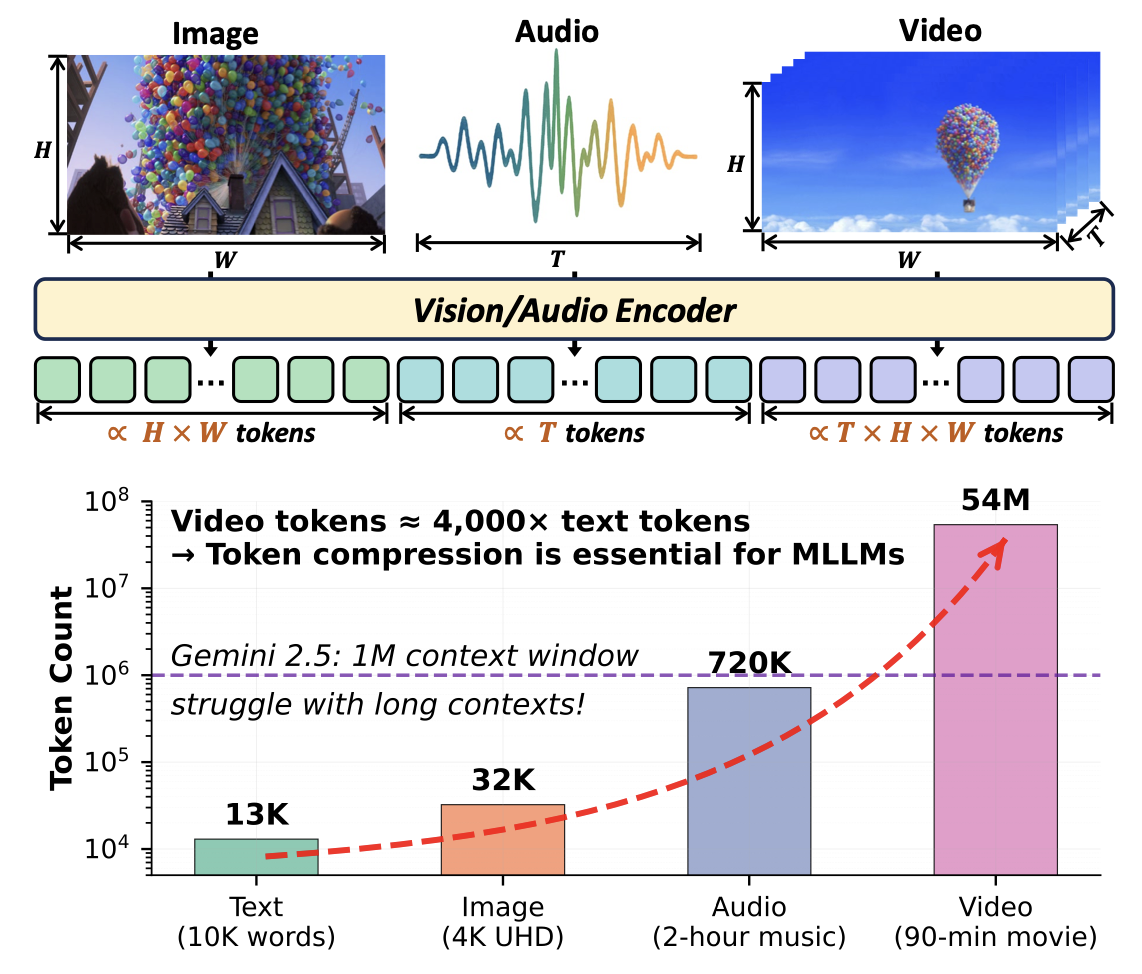

| Dec, 2025 | Vlm2vec-v2 (MMEB-V2) and our MLLM token compression survery were accepted by TMLR. |

| Oct, 2025 | Holitom was accepted by NeurIPS 25. We have released the CoDA (a 1.7b coding DLLM model). |

| May, 2025 | CogAlign was accepted by ACL findings and we have released BLIP-3o. |

| Feb, 2025 | We have two papers accepted by CVPR 25! Our latest paper CogAlign was released. |

| Sep, 2024 | Our Medical MLLM paper was accepted by EMNLP 24 (Main)! |

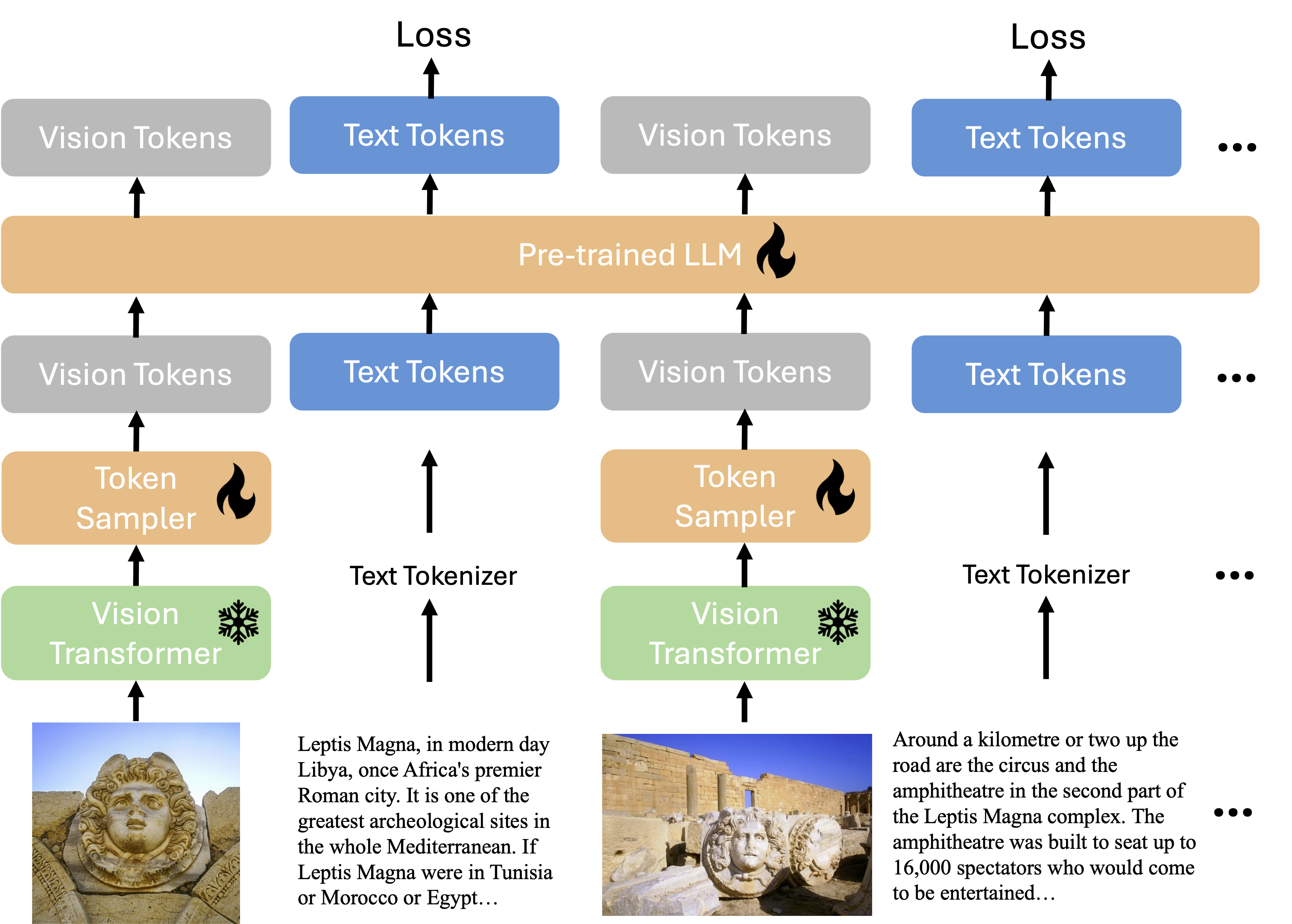

| Aug, 2024 | The xGen-MM (BLIP3) and xGen-VideoSyn-1 were released to the public! We have a paper accepted by TKDE and congrats to Yizhou! I have been invited as the reviewer of Nature Communications. |

| Jul, 2024 | We have one paper accepted by ECCV 24! |

| Feb, 2024 | We have one paper accepted by CVPR 24! |

| Nov, 2023 | Begin my journey at Salesforce Research in Palo Alto! |

selected publications

-

xGen-VideoSyn-1: High-fidelity Text-to-Video Synthesis with Compressed RepresentationsarXiv preprint arXiv:2408.12590, 2024

xGen-VideoSyn-1: High-fidelity Text-to-Video Synthesis with Compressed RepresentationsarXiv preprint arXiv:2408.12590, 2024